Pt. 1 - Exploring Virtual Production

Spring 2021 - LSU DMAE and Digital Art spearheaded a grant application to develop an all-new Virtual Production program and XR (extended reality) studio. Our project served as a proof of concept for LSU's application to the Louisiana Economic Development fund.

See our Git Repository for more details.

The Mustang Garage

In the midst of the Covid-19 lockdown, our team of three graduate students and DMAE faculty member Marc Aubanel began exploring basic camera tracking in Unreal Engine using SteamVR. My contributions included setting up our hardware pipeline, compositing, and video work such as camera motion capture.

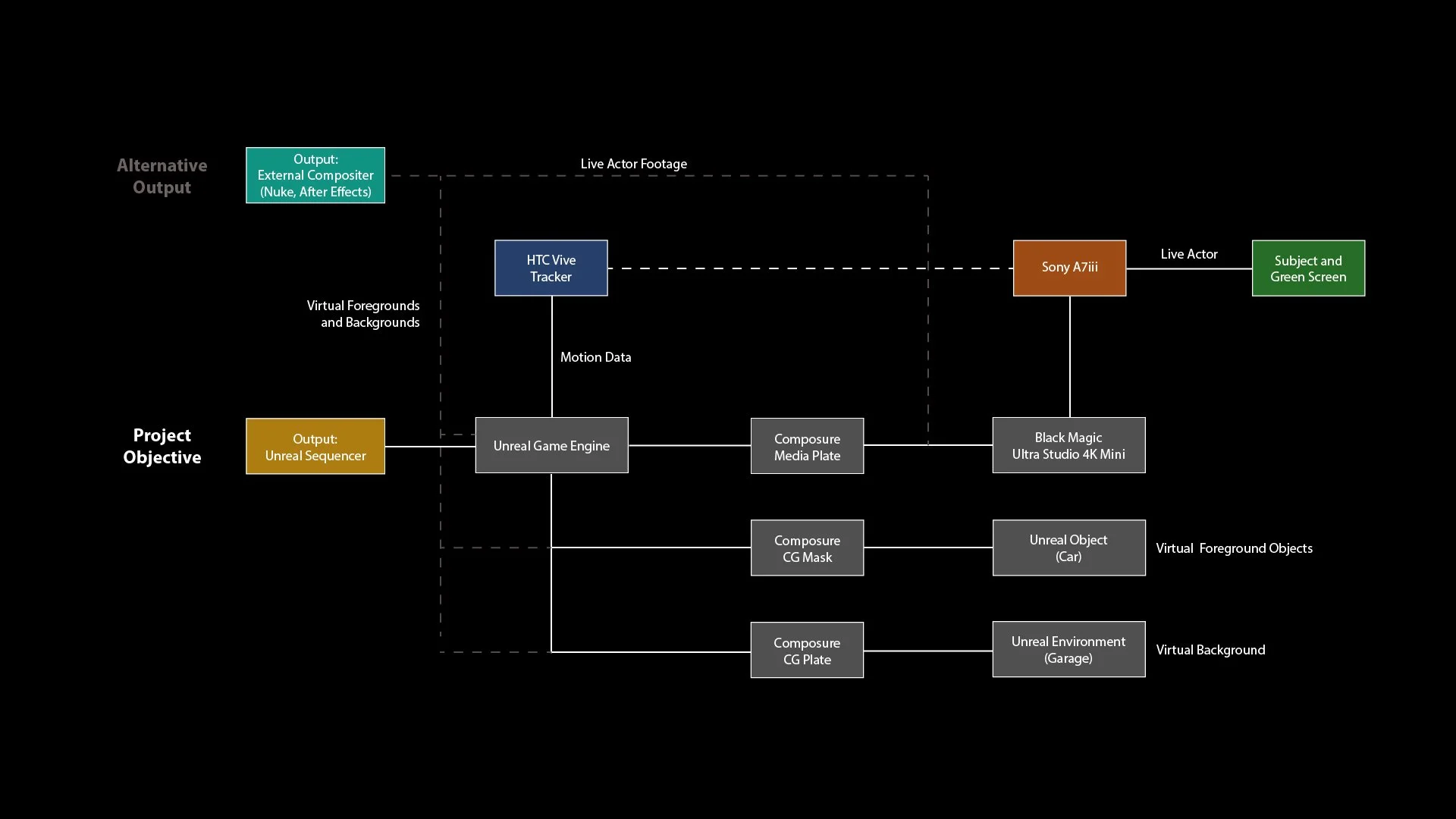

Most scenes were composited in UE4 Composure and exported directly from Sequencer. The live actor was filmed on a Sony A7 III, and the camera's motion was tracked with an HTC Vive. Video was captured and brought into Unreal through a Blackmagic UltraStudio 4K Mini.
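Composure handles the layering inside Unreal. The core operation for placing a live-action layer on top of a CG render is the standard Porter-Duff "over" blend. The following is a minimal plain-Python sketch of that operation. It assumes the actor's plate already carries a matte (alpha) from some keying step, which this section does not describe. The names `over_pixel` and `composite` are hypothetical and are not part of Composure or any Unreal API.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]    # rows of RGB pixels, values in [0, 1]
Matte = List[List[float]]  # rows of alpha values, 1.0 = fully live-action


def over_pixel(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Porter-Duff 'over' for one pixel, with a straight (non-premultiplied) foreground."""
    a = min(max(alpha, 0.0), 1.0)  # clamp stray matte values into [0, 1]
    r, g, b = (f * a + k * (1.0 - a) for f, k in zip(fg, bg))
    return (r, g, b)


def composite(live: Image, matte: Matte, cg: Image) -> Image:
    """Layer the live-action plate over the rendered CG plate, pixel by pixel."""
    if not (len(live) == len(matte) == len(cg)):
        raise ValueError("plates must have the same height")
    out: Image = []
    for live_row, matte_row, cg_row in zip(live, matte, cg):
        if not (len(live_row) == len(matte_row) == len(cg_row)):
            raise ValueError("plates must have the same width")
        out.append([over_pixel(f, a, k) for f, a, k in zip(live_row, matte_row, cg_row)])
    return out


if __name__ == "__main__":
    live = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]  # red "actor" plate
    matte = [[1.0, 0.25]]                          # opaque, then mostly transparent
    cg = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]      # blue "virtual set" plate
    print(composite(live, matte, cg))  # [[(1.0, 0.0, 0.0), (0.25, 0.0, 0.75)]]
```

Where the matte is 1.0, only the live plate shows. At 0.25, the CG background contributes three quarters of the color. That partial blend is what lets soft edges such as hair sit naturally in the virtual scene.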

Primary Focus: Developing Hardware and UE4 Composure Workflows

In our first exploration of UE4 as a virtual production (VP) tool, we found it capable but not always intuitive. Composure was adequate for previewing layers within a scene, but it fell short for final presentation.

Our other major challenge with this basic setup was camera tracking. The HTC Vive had pairing issues with UE4. Without proper syncing between the tracking data and the video feed, the virtual layers appeared to "float" against the physical footage.
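A "floating" effect like this typically appears when a video frame is paired with a camera pose captured at a different instant. One example is a capture path that delivers video a few frames later than the tracker delivers poses. A common mitigation is to buffer tracker samples and, for each video frame, look up the pose from a fixed latency earlier. The following Python sketch is a hypothetical illustration of that idea. It is not what our team implemented, and it is not how Unreal synchronizes tracking data internally. It assumes that both streams are timestamped on a shared clock and that `latency_s` has been measured. It interpolates position only, since rotation would need quaternion slerp.

```python
import bisect
from collections import deque
from dataclasses import dataclass
from typing import Deque


@dataclass
class Pose:
    t: float  # arrival time in seconds on a clock shared with the video path (assumed)
    x: float
    y: float
    z: float


class TrackingDelayBuffer:
    """Keep recent tracker samples and return the pose that matches a video frame.

    latency_s is a hypothetical, measured amount by which video arrives later
    than the tracking data describing the same instant.
    """

    def __init__(self, latency_s: float, max_samples: int = 1000) -> None:
        self.latency_s = latency_s
        self.samples: Deque[Pose] = deque(maxlen=max_samples)  # oldest samples drop off

    def push(self, pose: Pose) -> None:
        # Samples are assumed to arrive in increasing timestamp order.
        self.samples.append(pose)

    def pose_for_frame(self, frame_t: float) -> Pose:
        """Linearly interpolate the tracked position at frame_t - latency_s."""
        if not self.samples:
            raise LookupError("no tracking samples yet")
        target = frame_t - self.latency_s
        times = [p.t for p in self.samples]
        i = bisect.bisect_left(times, target)
        if i == 0:  # frame is older than the buffer: clamp to the first sample
            return self.samples[0]
        if i == len(times):  # frame is newer than the buffer: hold the last sample
            return self.samples[-1]
        a, b = self.samples[i - 1], self.samples[i]
        w = (target - a.t) / (b.t - a.t)  # safe: a.t < target <= b.t
        return Pose(target,
                    a.x + w * (b.x - a.x),
                    a.y + w * (b.y - a.y),
                    a.z + w * (b.z - a.z))


if __name__ == "__main__":
    buf = TrackingDelayBuffer(latency_s=0.5)
    for t, x in [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]:
        buf.push(Pose(t, x, 0.0, 0.0))
    print(buf.pose_for_frame(2.0).x)  # 1.5
```

A single fixed offset only corrects a constant delay. If the latency drifts, the layers will still slip relative to each other, which is why hardware-level sync is preferable where the equipment supports it.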